This article was last updated 218 days ago; some of the information in it may have since changed.

Qwen1.0 (fairly weak; the newer 1.5 is covered further down)

Download the source code

git clone https://gitee.com/qzl66/Qwen.git

Download the models

Download them into Qwen/Qwen/

git clone https://www.modelscope.cn/qwen/Qwen-14B-Chat.git

git clone https://www.modelscope.cn/qwen/Qwen-7B-Chat.git

Create the environment

conda create -n qwen

conda activate qwen

# The Tsinghua mirror is really fast

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

Modify the code

Edit the openai_api.py file

# Around line 530, change the default model path, port, and IP (0.0.0.0 recommended)

default='Qwen/Qwen-7B-Chat',

default=8000,

default='0.0.0.0',
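The three defaults above sit in the script's command-line argument definitions. A minimal sketch of what that section might look like after editing, assuming an argparse layout: the flag names `--checkpoint-path`, `--server-port` and `--server-name` are assumptions here, so check them against your own copy of openai_api.py. Only the three default values come from the steps above.

```python
from argparse import ArgumentParser


def build_parser() -> ArgumentParser:
    # Hypothetical flag names; only the three default values come from the text.
    parser = ArgumentParser()
    parser.add_argument("-c", "--checkpoint-path", type=str,
                        default="Qwen/Qwen-7B-Chat",
                        help="model directory or name")
    parser.add_argument("--server-port", type=int, default=8000,
                        help="port to listen on")
    parser.add_argument("--server-name", type=str, default="0.0.0.0",
                        help="0.0.0.0 listens on all network interfaces")
    return parser


# Parse an empty argument list to show the defaults that would be used.
args = build_parser().parse_args([])
print(args.checkpoint_path, args.server_port, args.server_name)
```

Binding to 0.0.0.0 makes the service reachable from other machines, so only do this on a network you trust.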

# Line 487 has a streaming bug: when stop_words is empty, max() is called on an empty list and raises ValueError

delay_token_num = max([len(x) for x in stop_words])

# Change it to

if stop_words:

    delay_token_num = max([len(x) for x in stop_words])

else:

    delay_token_num = 0
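The failure mode behind this fix can be reproduced in isolation. The sketch below is not code from openai_api.py; the helper name `delay_token_num_for` is made up for illustration. It shows the unguarded call failing on an empty list and an equivalent one-line guard using the `default` argument of `max()`.

```python
from typing import List


def delay_token_num_for(stop_words: List[str]) -> int:
    """Return the length of the longest stop word, or 0 when there are none."""
    # max() raises ValueError on an empty iterable; default=0 covers that case.
    return max((len(x) for x in stop_words), default=0)


# The unguarded expression fails as soon as no stop words are supplied.
try:
    max([len(x) for x in []])
except ValueError as exc:
    print("unguarded call failed:", exc)

print(delay_token_num_for([]))
print(delay_token_num_for(["Observation:", "\n"]))
```

Either form works; the if/else version changes fewer characters in the original file, while `default=0` keeps it to one line.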

# For multiple GPUs, change this:

model = AutoModelForCausalLM.from_pretrained(

    args.checkpoint_path,

    device_map=device_map,

    trust_remote_code=True,

    resume_download=True,

).eval()

# to this (num_gpus = number of GPUs):

from utils import load_model_on_gpus

model = load_model_on_gpus(args.checkpoint_path, num_gpus=4)

Finally, run it directly

python openai_api.py

Qwen1.5

Download the model

git clone https://www.modelscope.cn/qwen/Qwen1.5-7B.git

Create the environment


conda create -n Qwen1.5 python=3.9 -y

conda activate Qwen1.5

# Install vLLM with CUDA 12.1.

pip install vllm

# Final list of installed pip packages

Package Version

----------------------------- ------------

accelerate 0.28.0

addict 2.4.0

aiohttp 3.9.3

aiosignal 1.3.1

aliyun-python-sdk-core 2.15.0

aliyun-python-sdk-kms 2.16.2

annotated-types 0.6.0

anyio 4.3.0

appdirs 1.4.4

APScheduler 3.9.1

argos-translate-files 1.1.4

argostranslate 1.9.0

async-timeout 4.0.3

attrs 23.2.0

Babel 2.14.0

beautifulsoup4 4.9.3

cachelib 0.12.0

certifi 2024.2.2

cffi 1.16.0

charset-normalizer 2.1.1

click 8.1.7

cloudpickle 3.0.0

commonmark 0.9.1

crcmod 1.7

cryptography 42.0.5

ctranslate2 3.20.0

cupy-cuda12x 12.1.0

datasets 2.18.0

Deprecated 1.2.14

dill 0.3.8

diskcache 5.6.3

einops 0.7.0

exceptiongroup 1.2.0

expiringdict 1.2.2

fastapi 0.110.0

fastrlock 0.8.2

filelock 3.13.1

Flask 2.2.2

flask-babel 3.1.0

Flask-Limiter 2.6.3

Flask-Session 0.4.0

flask-swagger 0.2.14

flask-swagger-ui 4.11.1

frozenlist 1.4.1

fsspec 2024.2.0

gast 0.5.4

h11 0.14.0

httptools 0.6.1

huggingface-hub 0.21.4

idna 3.6

importlib_metadata 7.0.2

importlib_resources 6.3.1

interegular 0.3.3

itsdangerous 2.1.2

Jinja2 3.1.3

jmespath 0.10.0

joblib 1.3.2

jsonschema 4.21.1

jsonschema-specifications 2023.12.1

lark 1.1.9

libretranslate 1.3.13

limits 3.10.1

llvmlite 0.42.0

LTpycld2 0.42

lxml 5.1.0

MarkupSafe 2.1.5

modelscope 1.13.1

Morfessor 2.0.6

mpmath 1.3.0

msgpack 1.0.8

multidict 6.0.5

multiprocess 0.70.16

nest-asyncio 1.6.0

networkx 3.2.1

ninja 1.11.1.1

nltk 3.8.1

numba 0.59.1

numpy 1.26.4

nvidia-cublas-cu12 12.1.3.1

nvidia-cuda-cupti-cu12 12.1.105

nvidia-cuda-nvrtc-cu12 12.1.105

nvidia-cuda-runtime-cu12 12.1.105

nvidia-cudnn-cu12 8.9.2.26

nvidia-cufft-cu12 11.0.2.54

nvidia-curand-cu12 10.3.2.106

nvidia-cusolver-cu12 11.4.5.107

nvidia-cusparse-cu12 12.1.0.106

nvidia-nccl-cu12 2.18.1

nvidia-nvjitlink-cu12 12.4.99

nvidia-nvtx-cu12 12.1.105

oss2 2.18.4

outlines 0.0.36

packaging 23.1

pandas 2.2.1

pillow 10.2.0

pip 23.3.1

platformdirs 4.2.0

polib 1.1.1

prometheus_client 0.20.0

protobuf 5.26.0

psutil 5.9.8

pyarrow 15.0.2

pyarrow-hotfix 0.6

pycparser 2.21

pycryptodome 3.20.0

pydantic 2.6.4

pydantic_core 2.16.3

Pygments 2.17.2

pynvml 11.5.0

python-dateutil 2.9.0.post0

python-dotenv 1.0.1

pytz 2024.1

PyYAML 6.0.1

ray 2.10.0

redis 4.3.4

referencing 0.34.0

regex 2023.12.25

requests 2.28.1

rich 12.6.0

rpds-py 0.18.0

sacremoses 0.0.53

safetensors 0.4.2

scipy 1.12.0

sentencepiece 0.1.99

setuptools 68.2.2

simplejson 3.19.2

six 1.16.0

sniffio 1.3.1

sortedcontainers 2.4.0

soupsieve 2.5

sse-starlette 2.0.0

stanza 1.1.1

starlette 0.36.3

sympy 1.12

tiktoken 0.6.0

tokenizers 0.15.2

tomli 2.0.1

torch 2.1.2+cu121

torchaudio 2.1.2+cu121

torchvision 0.16.2+cu121

tqdm 4.66.2

transformers 4.39.0

transformers-stream-generator 0.0.4

translatehtml 1.5.2

triton 2.1.0

typing_extensions 4.10.0

tzdata 2024.1

tzlocal 5.2

urllib3 1.26.18

uvicorn 0.28.1

uvloop 0.19.0

vllm 0.3.3

waitress 2.1.2

watchfiles 0.21.0

websockets 12.0

Werkzeug 2.2.2

wheel 0.41.2

wrapt 1.16.0

xformers 0.0.23.post1

xxhash 3.4.1

yapf 0.40.2

yarl 1.9.4

zipp 3.18.1

Rename the model folder to qwen1.5-14b-chat so that it is easier to hook up to the API

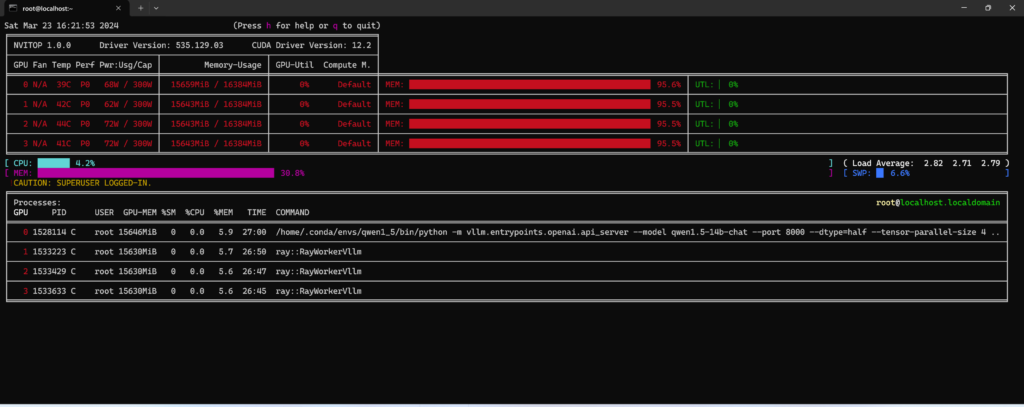

Run the model

# sh

cd /datas/ptyhon_app/Qwen1.5/Qwen

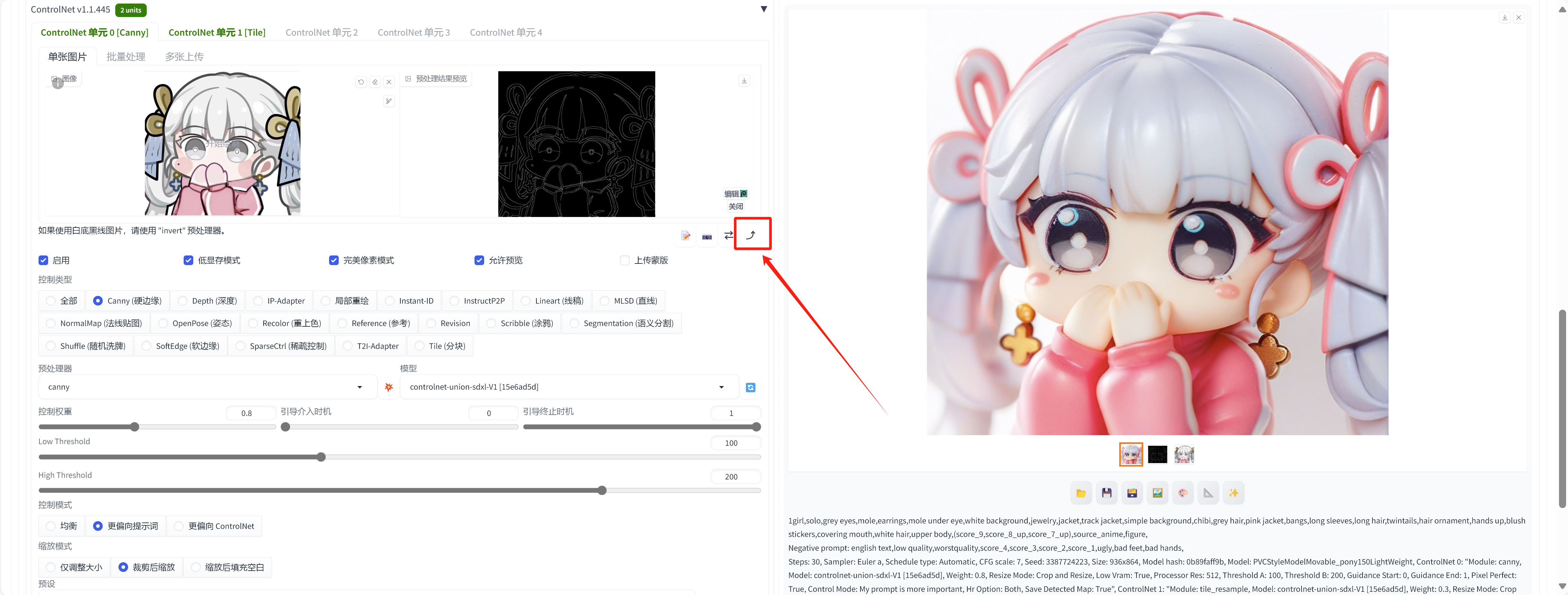

/home/.conda/envs/qwen1_5/bin/python -m vllm.entrypoints.openai.api_server --model qwen1.5-14b-chat --port 8000 --dtype=half --tensor-parallel-size 4 --gpu-memory-utilization 0.9 --max-model-len 4096

It works well. To finish, here is a screenshot.
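Once the vLLM server from the command above is listening, a quick way to check it is to send one chat request to its OpenAI-compatible route. This is a minimal sketch using only the standard library. It assumes the client runs on the same machine (127.0.0.1); the port and model name are taken from the launch command, and the helper names `build_chat_request` and `ask` are made up for illustration.

```python
import json
import urllib.request

# Assumptions: same machine as the server; port and model name as in the launch command.
BASE_URL = "http://127.0.0.1:8000"
MODEL_NAME = "qwen1.5-14b-chat"


def build_chat_request(prompt: str, model: str = MODEL_NAME) -> urllib.request.Request:
    """Build a POST request for the /v1/chat/completions route."""
    payload = {
        "model": model,  # by default vLLM serves the model under the --model value
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Hello"))` should print a short reply. This is why the folder was renamed earlier: the name passed to `--model` is the one clients put in the `model` field.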